Thinking out loud here, because I just had an interesting idea for another way to approach writing the representer that might be vastly more powerful than the current way. Perhaps through writing this or receiving comments from any of you, it can be determined whether it could work.

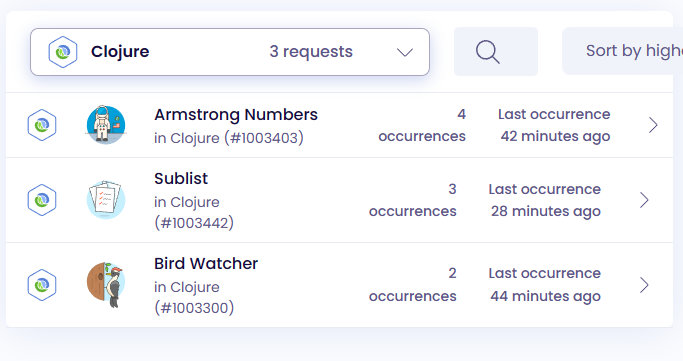

The problem is that although my current macroexpansion-based normalization strategy seems to work, it still leaves far too much variation between normalized solutions to be useful for grouping them into approaches, which is the entire point of a representer. For example, when I excitedly ran it against 500 solutions for armstrong-numbers, it still produced something like 470 unique representations!

The only obvious way to improve upon this implementation would be to perform additional massaging of the resulting data, such as collapsing redundant do blocks and such. But this feels too much like painstakingly “chipping away” at the code for only minor incremental improvements. So I’ve been brainstorming whether there could be a better way.
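To make the "chipping away" concrete, here is a minimal sketch of one such massaging pass. It is not the representer's actual code: `collapse-do` is a hypothetical helper, and it assumes the normalized solution is available as plain Clojure data after `clojure.walk/macroexpand-all`.

```clojure
(require '[clojure.walk :as walk])

(defn collapse-do
  "Hypothetical cleanup pass: replaces every single-expression
  `(do x)` form with `x`, working from the innermost forms outward."
  [form]
  (walk/postwalk
   (fn [node]
     (if (and (seq? node)
              (= 'do (first node))
              (= 2 (count node)))
       (second node)
       node))
   form))

;; `when` expands to `(if test (do body...))`, so nested `when`s
;; leave a redundant `do` at every level.
(def expanded
  (walk/macroexpand-all '(when (pos? n) (when (even? n) :ok))))
;; expanded is (if (pos? n) (do (if (even? n) (do :ok))))

(collapse-do expanded)
;; => (if (pos? n) (if (even? n) :ok))
```

Note that this sketch is purely syntactic, so it would also rewrite a quoted data list that happens to start with `do`. Every such rule needs its own caveats, which is exactly why this route feels so incremental.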

Ever since we first started talking about these darn things called representers, a little voice in the back of my head has been whispering,

“Logic programming”.

That sounds kind of cool I guess, but what exactly made me think of that? For one thing, I’ve seen a couple of presentations about logic programming (I think by William Byrd, Nada Amin, and Bodil Stokke) where they performed absolutely wondrous things, including running code backwards, i.e. giving the system a set of inputs and a desired return value and watching it quite magically produce the function.

I also happen to know of a library called kibit. From the readme:

> kibit is a static code analyzer . . . [which] uses core.logic to search for patterns of code that could be rewritten with a more idiomatic function or macro.

This sounds like it could actually be quite useful just on its own, as part of the analyzer perhaps. But I’m thinking much more could probably be learned by studying it to see exactly how core.logic is used to this effect.

But that’s not even the idea that I want to try first… this one was inspired by re-find by Michiel Borkent (the legendary borkdude), which is powered by clojure.spec. It allows you to find functions via reverse-lookup, by providing the desired arguments and return spec.

clojure.spec is a data specification library that serves as Clojure’s answer to not having type annotations. It integrates with generative testing through test.check, a Clojure property-based testing library inspired by Haskell’s QuickCheck.

I’m thinking that spec could be used to determine whether a given function in a solution conforms to that of one in another solution.
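As a rough sketch of what that could look like, assuming a hand-written spec for now: `s/fspec` describes a function's arguments and return value, and `s/valid?` then exercises a candidate function with generated inputs (this needs `org.clojure/test.check` on the classpath). The names `::armstrong-fn`, `armstrong-str?` and `armstrong-arith?` are made up for illustration, and the input range is capped arbitrarily to keep the arithmetic small.

```clojure
(require '[clojure.spec.alpha :as s])

;; Hypothetical contract: takes one small non-negative integer,
;; returns a boolean. The 0..100000 bound is an assumption.
(s/def ::armstrong-fn
  (s/fspec :args (s/cat :n (s/int-in 0 100000))
           :ret  boolean?))

(defn- sum-of-powers [digits]
  (let [k (count digits)]
    (reduce + (map #(reduce * (repeat k %)) digits))))

;; Solution A: goes through the string representation.
(defn armstrong-str? [n]
  (= n (sum-of-powers (map #(Character/digit ^char % 10) (str n)))))

;; Solution B: peels digits off arithmetically.
(defn armstrong-arith? [n]
  (= n (sum-of-powers
        (if (zero? n)
          [0]
          (->> n
               (iterate #(quot % 10))
               (take-while pos?)
               (map #(rem % 10)))))))

;; Both solutions should satisfy the same function spec...
(s/valid? ::armstrong-fn armstrong-str?)
(s/valid? ::armstrong-fn armstrong-arith?)
;; ...while a function with a different return type should not.
(s/valid? ::armstrong-fn inc)
```

This only checks the shape of the arguments and return value, not that the two functions compute the same thing, so on its own it would be a fairly coarse way to group solutions.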

But how do we do that without specifying the first one?

There’s another library (enough libraries for you?) called spec-provider that does just that: it infers Clojure specs from sample data. Inspired by F#'s type providers, it also has experimental support for inferring the specs of functions.

IDK, I might be a bit too optimistic about the possibility of this actually working. But at least I’ll surely learn something while trying…