I think it should be possible to read off the database whether mentoring is a good experience. For example, if there are lots of users who were mentored a few times but then stopped requesting mentoring while remaining active on the site, there might be something going wrong.
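To make that heuristic concrete, here is a rough sketch in Python. It is only an illustration: the `UserActivity` record, its field names, and the thresholds (`min_requests`, `quiet_days`) are assumptions made up for this example and do not reflect Exercism's actual schema.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class UserActivity:
    # Hypothetical record; the field names are assumptions, not a real schema.
    user_id: int
    mentoring_requests: list[date]  # dates on which the user requested mentoring
    submissions: list[date]         # dates on which the user submitted a solution


def dropped_mentoring_but_still_active(
    user: UserActivity,
    as_of: date,
    min_requests: int = 2,
    quiet_days: int = 90,
) -> bool:
    """Flag users who were mentored a few times, then stopped asking
    while still submitting solutions."""
    if len(user.mentoring_requests) < min_requests:
        return False  # never really tried mentoring
    cutoff = as_of - timedelta(days=quiet_days)
    if max(user.mentoring_requests) >= cutoff:
        return False  # still requesting mentoring recently
    # Still active: at least one submission inside the quiet window.
    return any(submitted >= cutoff for submitted in user.submissions)
```

A large share of flagged users among everyone who has ever requested mentoring would be a hint, not proof, that something is going wrong.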

I agree - for me too, mentoring is a lot more helpful/needed when I struggle on a problem. Rather than going to the forum and posting there, asking a mentor for help would be a great boost to motivation for people who are new and struggling. So, I recommend that mentoring be available even when you fail the tests (which is already possible: I tried it once on an exercise and I was able to submit to a mentor).

Late to the party but thought I’d add my experience. I’m pretty new to Exercism and haven’t yet taken advantage of the mentoring feature. One reason for that is that most of the exercises that I’ve done have been pretty basic (I’ve completed 11 exercises from the Elixir track and all but 1 have been “learning exercises”). I’ve made several changes to my own code after reviewing the community solutions but so far I haven’t felt that mentoring would add much beyond that since there isn’t much code to review (perhaps I’m missing out though).

The problem with this is that you’re basing your knowledge of what you struggle with or not on your own perception. You might think you’re writing amazing code in a language, when actually it’s terrible. We see this all the time. It’s the “you don’t know what you don’t know” principle. It’s the main value of mentoring, and I wish I could get everyone to see this somehow.

Yeah - the challenge with this is that every beginner struggling to solve an exercise would suddenly want a mentor and we’d be overloaded overnight. I agree in principle with the feature, but I don’t know how to sanely implement it. Also, that sort of mentoring requires much more “real time” support - not async, post-a-comment-once-a-day stuff, I think.

I feel like maybe there’s an opportunity here to create a chat space inside the editor and send invite links to others to talk with them in there. Then they could post a link in the Discord and someone could jump in real-time and help.

I added a thread on the previous idea here: Synchronous conversation in the editor when you get stuck?

Note to self: Let’s try and get this conversation back on track re being about the potential renaming now.

I’ve had many mentees tell me that they’re amazed at how much they’ve learned from a basic exercise. You could open a mentor request and ask if a mentor sees anything worth discussing.

I’m with Andre here - I am skeptical that a rename will overcome what Matthijs gets at - there are a significant number of people who are hesitant to share their code (or don’t feel the need to for whichever reason).

“code review” feels very judge-y to me, and may or may not discourage people. It all depends on their perception of (and experiences with) the term.

But testing a rename out would at least give us data.

Some other “names” that come to mind:

- Get a critique from a mentor (again, judge-y)

- Get a review from a code coach (I don’t like the sports metaphor, but it feels friendlier)

- Work with a reviewer to make your code better

- Work with a mentor to make your code better

- Get coaching to improve your solution

- Get feedback on improving your code

- Improve your code with code review

- Make your code better with a code review

In addition to that list: code discussion / «discuss your solution/code with a mentor».

Part of Exercism’s take on the exercises is to gain fluency in a language. One thing that a “student” of the track may lack is a sense of which aspects of a given exercise lend themselves to building such fluency.

Every exercise, including TwoFer or Gigasecond which are usually easy (compared to later exercises, at least) to solve, can give a chance to really explore the fluency aspect, as the problem is simple enough that exploring the language itself does not compete with solving the problem given.

The practice exercises are for practice, and if you believe you got enough out of it, that is fine, really. But I think sometimes we short-change ourselves by not reaching out and saying “Is there anything that I am missing here, other ways that I can explore the language given this exercise? Are there some choices I made without realizing, maybe, that I even had a choice?”

I am a struggler: to me ‘code review’ sounds terribly intimidating. It’s what proficient, equally competent coders do amongst themselves because no one’s perfect, no one has all the possible enhancements figured out, all the traps avoided. I think that we strugglers need guidance – mentoring, coaching, teaching, not ‘code review’. But others here, dabbling in their 14th language, seem a bit insulted at the notion of someone ‘mentoring’ them. Perhaps one asks for ‘code review’ in practice exercises, ‘mentoring’ when in ‘learning mode’? I also feel that allowing mentoring only for passing code excludes the casual struggler who might not even know what the ‘command line’ is, but might become a serious student with a little bit of good mentoring; I like to think that ‘Exercism is for everyone’.

What about some A-B testing? Compile a list of phrases (“Request mentoring”, “Discuss code with a mentor”, “Request code review”, etc.), show each user a randomly selected one, and see which phrase gets the most results? Or is that asking too much?
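For what it’s worth, a minimal sketch of how such an A-B test could be wired up, in Python. Everything here is an assumption for illustration: the function names `label_for` and `request_rates`, the idea of bucketing by a hash of the user id, and the event format are all hypothetical rather than anything Exercism actually does.

```python
import hashlib
from collections import Counter

# The candidate phrases from the post above.
VARIANTS = [
    "Request mentoring",
    "Discuss code with a mentor",
    "Request code review",
]


def label_for(user_id: str, variants: list[str] = VARIANTS) -> str:
    """Pick a label for a user. Hashing the id keeps the choice stable,
    so the same user sees the same wording on every visit."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(variants)
    return variants[index]


def request_rates(events: list[tuple[str, bool]]) -> dict[str, float]:
    """Given (label shown, did the user request?) pairs, return the
    fraction of users per label who went on to make a request."""
    shown: Counter[str] = Counter()
    requested: Counter[str] = Counter()
    for label, did_request in events:
        shown[label] += 1
        if did_request:
            requested[label] += 1
    return {label: requested[label] / shown[label] for label in shown}
```

Comparing the rates per label only means something once each variant has been shown to enough users, so a real test would also need a significance check.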

Jeremy,

While we are on this topic, I wanted to ask about the right time to ask for mentoring. Initially I would submit the request for mentoring as soon as I finish an exercise. Then I would head out to community solutions and start reviewing them. For many of the mentoring requests, the suggestions made by the mentors were already covered in the community solutions. So, I did not feel that they added much value.

Then, I tried reviewing the community solutions and for many of the exercises, I felt I got what I wanted, but still I submitted for mentorship and I did not get any meaningful feedback from my mentors.

But there were other cases where I had engaged in a long conversation with a mentor and found it very enriching. But most of the mentors I got did not provide any meaty suggestions - so, I am wondering how to strike a balance between community solutions and learning on my own and using a mentor? I would appreciate your thoughts on this.

As a mentor, I am just as happy to receive requests of the form:

> I am content with my own solution, and I see X, Y, and Z in the community solutions and that all makes sense to me. The only question I have is: is there anything else to this exercise that I might find interesting?

Such requests are appropriately easy to handle: if there isn’t then the answer is “Nope, you’re good!”, and if there is then I can elaborate liberally.

If you suspect another mentor might have something interesting to say, you can resubmit your request.

After reading all the above replies, I would like to see something along these lines:

Rename to something that is neutral, like “Discuss with mentor”, “Get feedback”. When we click on that we get a modal and now we can select anything that applies, like:

- I want to improve my code

- I want a code review

- I have looked at the community solutions

- Other: Specify

Something like that would help both mentors and students.

It seems like there are plenty of good reasons for and against rebranding mentorship. Does this have to be a binary decision? What about adding a manual code review option? It could feed into the current mentoring pipeline. Keep code reviews brief and mentoring as is. Hopefully, this solution would keep the people who like mentoring and add those people who would prefer code review.

Adding some nuance to what being mentored means might open the door wider for those hesitant to engage in what they may perceive as a mentoring relationship. Suppose we offered a menu of typical mentoring questions and maybe an indication of what to expect with each option. In that case, those who don’t feel worthy or aren’t initially trying to become an expert might be more inclined to make the request.

That is lovely - will use this - thanks a lot Matthijs

@IsaacG Probably asking too much. I’d like to, but realistically it’s more time than I have right now.

@siraj-samsudeen Good question! I agree with what @MatthijsBlom said. I think it’s probably always ok to ask the question and see what happens. A big point of mentoring is that we don’t know our own knowledge gaps so sometimes someone will see something in our code that we haven’t noticed ourselves. But also, sometimes the community solutions do a great job in showing us those things ourselves. The big question I’d ask is “Why did you not think of the perfect solution yourself?” - writing the code eventually isn’t the key thing, it’s about thinking in the correct way in the first place, and so maybe working with mentors to help determine how you can think more idiomatically is the key thing here.

We’ve done two things:

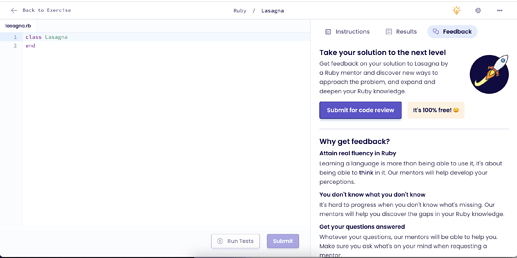

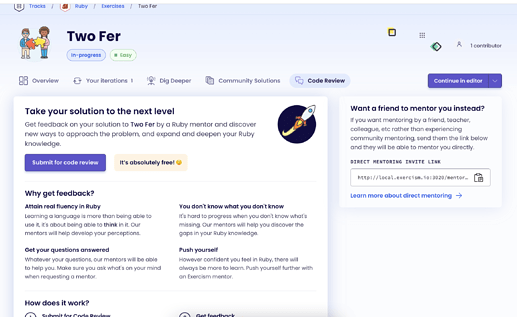

- Added a feedback tab to the editor where automated feedback and mentoring both appear. This is useful for being able to reference feedback once a mentoring session is in progress (and is the foundation for some other improvements coming soon). It’s also another opportunity to nudge someone to improve their code.

- Changed some of the labels to say “Submit for Code Review” rather than “Request Mentoring”. Let’s see what happens and we can change it back after a while if we don’t think it helps. “Mentors” are still “mentors”.

I also strongly agree with the opinion that code review should be mentoring. I’d argue that code review that doesn’t include useful discussion or mentoring isn’t being done well (and is effectively just a second line of CI). Code Review IMO should be an opportunity for a discussion about how best to solve a problem and write code, not an exam-marking exercise. So I’m happy to use this language here, at least as an experiment ![]()

This stuff will be rolling out in the next day or two. Thanks to everyone for contributing to the discussion. It’s been very helpful to read.

Here are two screenshots of the changes:

I think the renaming sounds good, but it highlights a weakness of the platform as it currently stands. People want, need, and CRAVE actual mentoring. How will we fill this void?

Under 1% of people submitting exercises request mentoring. That’s despite us plastering it everywhere, offering it to them for free, emailing them about it, and more. So clearly there’s some sort of huge disconnect between the people craving it and the people requesting it.

Often though, disconnects like this are based in lack of clarity about a process, fear of failure/rejection, or similar. So us focussing more heavily on telling people it’s safe and easy is probably the first way we should start. I suspect “Code Review” as a concept makes the process immediately clearer and therefore might lead to an uptick. But it might not and we might need to focus more heavily in other ways!

I’ve just deployed this. Let’s see what happens!